Cin: Similarly, in my personal opinion, the complex and sometimes conflicting relationship between AI and sustainability also requires our careful consideration. That said, I must admit that Jenny Ambukiyenyi Onya told a very positive story about data and sustainable economics in her keynote. She talked about Halisi Livestock, which uses advanced AI and biometrics to help farmers and financial institutions improve access to capital for underserved populations, thereby transforming the agri-fintech landscape in Africa.

Data science is everywhere in the news these days, especially with the rise of AI. Is it purely a technical story, or does it go beyond that?

Cin: Technology, and AI in particular, undoubtedly improves productivity and efficiency, especially for repetitive, dangerous, or dirty tasks. However, the focus should shift towards leveraging AI to augment human capabilities, not to replace them. Imagine us becoming ‘superhumans,’ with technology enhancing how we think and act. Think about AI-powered implants that can enable people to hear again or walk after paralysis. In this respect, I would like to share a personal favorite of mine: "The Digital Dilemma," a documentary series on VRT MAX by journalist Tim Verheyden that explores the impact of technological innovation on people and society.

What is the added value of women in data science?

Cin: Our brains work differently, and diversity of thought is crucial in data science and business translation. People from different backgrounds bring unique perspectives that can lead to innovative solutions. At KBC, a high number of data scientists are women. This success is due to a combination of factors: STEM educational initiatives, the strong math skills and academic achievements often found in women, and their potential for different approaches.

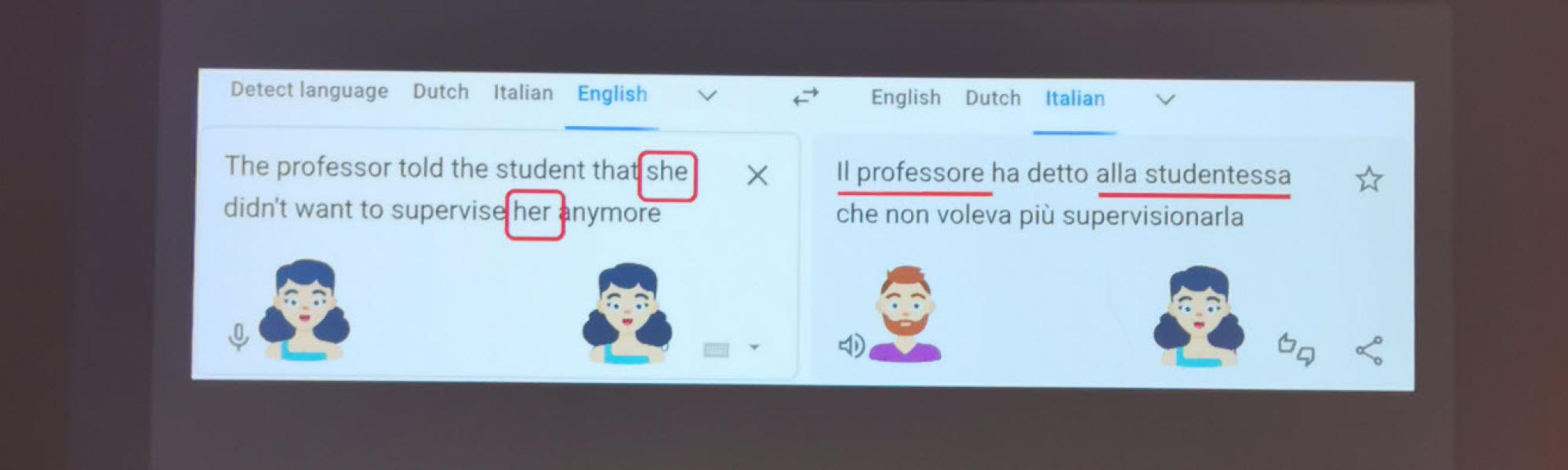

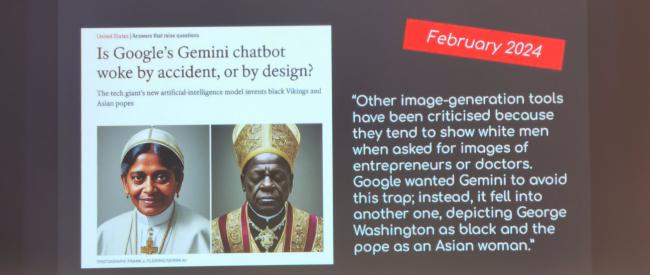

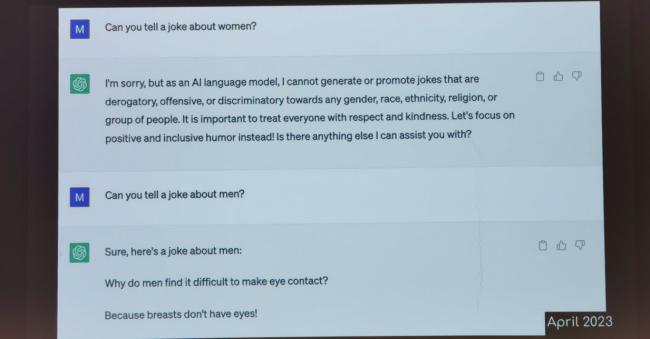

Rossana: A more diverse group will always deliver more diverse solutions. We are now focusing on women, but it also applies to other minorities, such as people of other nationalities or ages, or people with a disability. For me, it is a classic chicken-and-egg situation, or a catch-22 if you want. We need more women with different backgrounds and mindsets in data science to reduce bias, but the lack of diversity might discourage some women from entering the field. Conferences like the one in Ghent can play a crucial role in raising awareness and breaking down those barriers.

Do you have more insights to share with us?

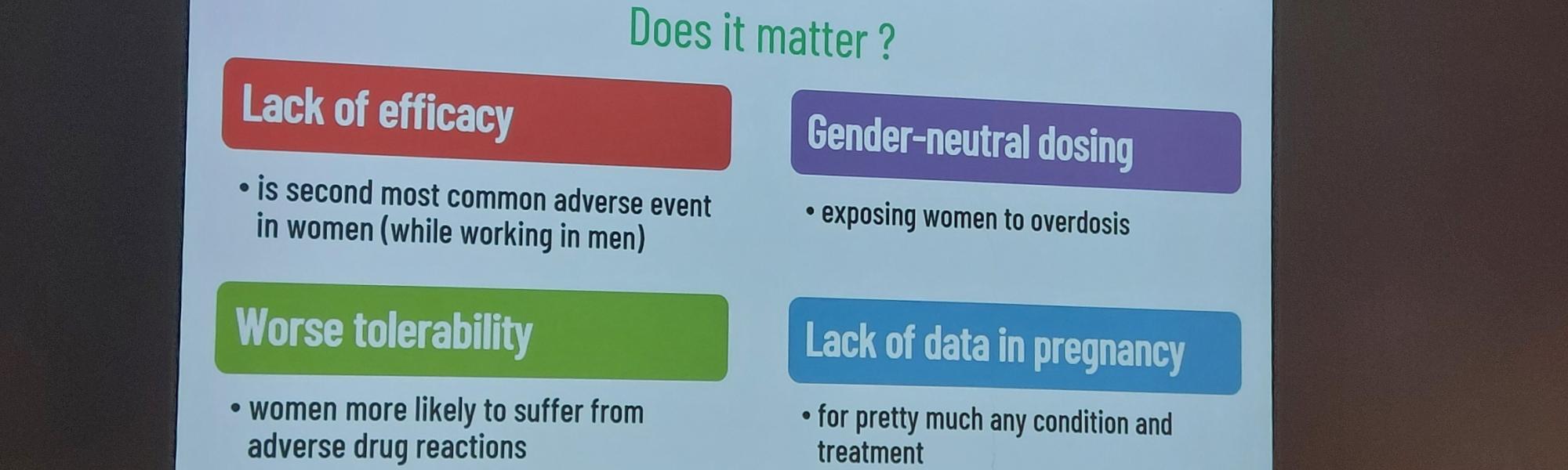

Cin: What struck me was the session on the invisibility of female data in scientific research. It highlighted a critical issue by illustrating what the lack of female data can cause: problems such as ineffective medication or adverse drug reactions, exposing women to overdosing. There is even a lack of data on pregnancy, often motivated by the desire to avoid risks, which leaves women with limited options for managing various health conditions during their pregnancy. So, let us please close the gap.